INNOVATIONS

Engineering AI to Give Speech to the Vocally Stricken

Innovations & Impacts

Human voice is one of the most unique gifts for human beings, whereas one may lose his/her distinctive voice which can be caused by stroke, head and neck cancers, neurological disorders and many other diseases. AI voices such as Siri and Alexa may help you with translation and even chat with you. But what differentiates humans from robots or artificial intelligence (AI) is the ability to communicate, generating the complex cognitive interactions like the expression of emotions in speech which can hardly be comprehended by programmed algorithms.

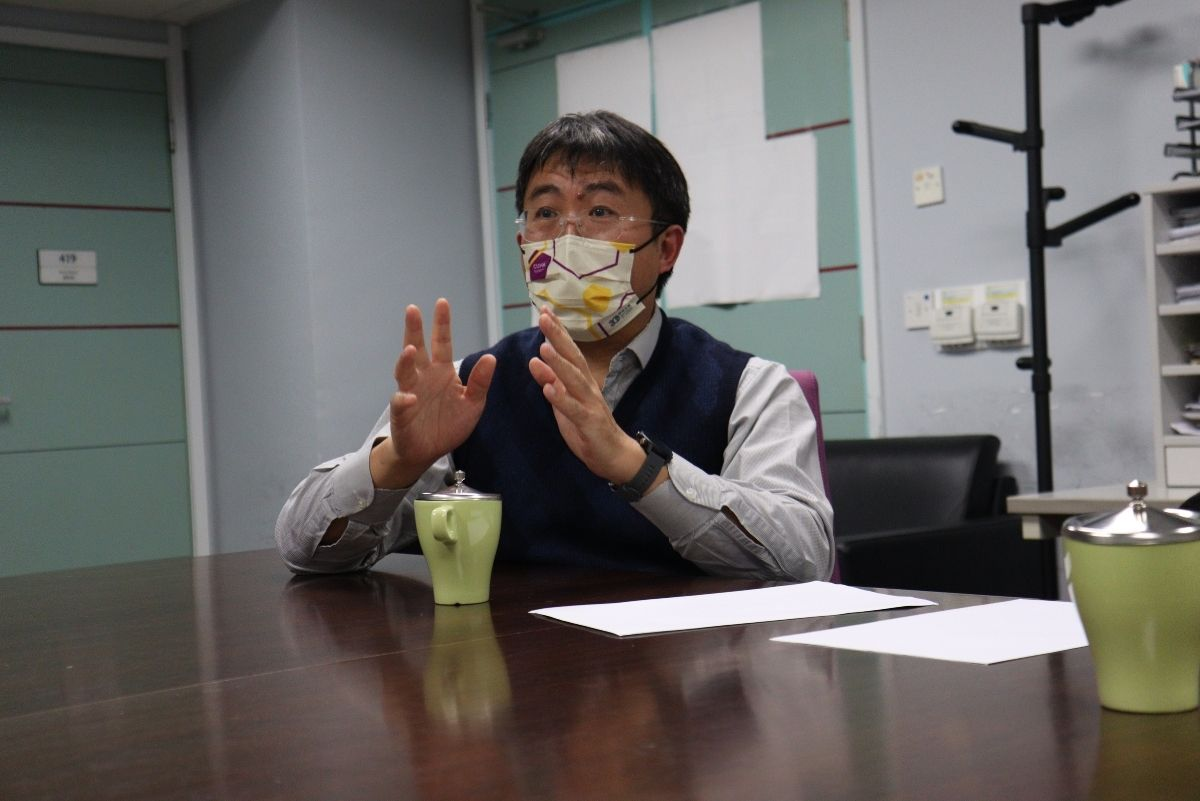

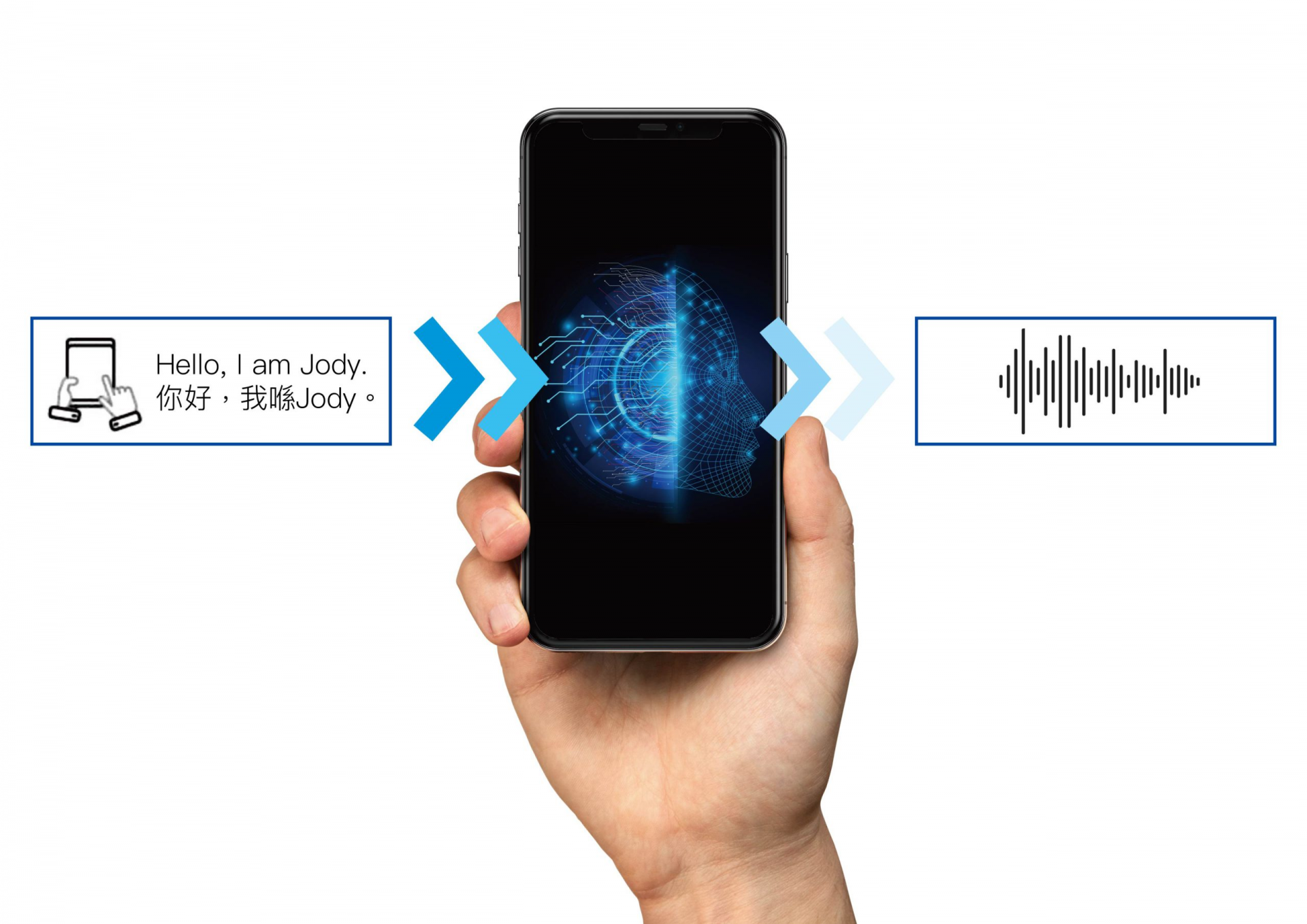

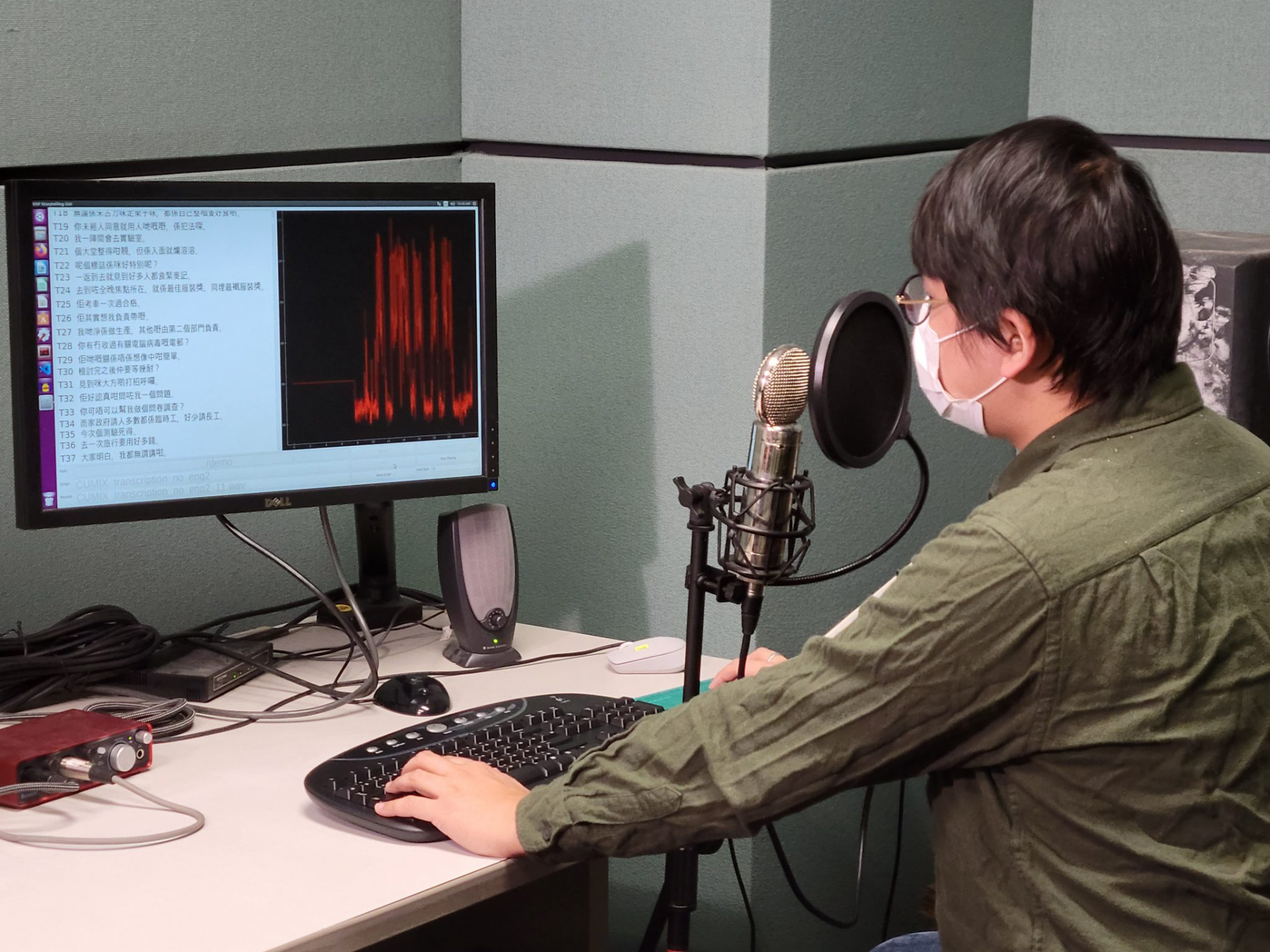

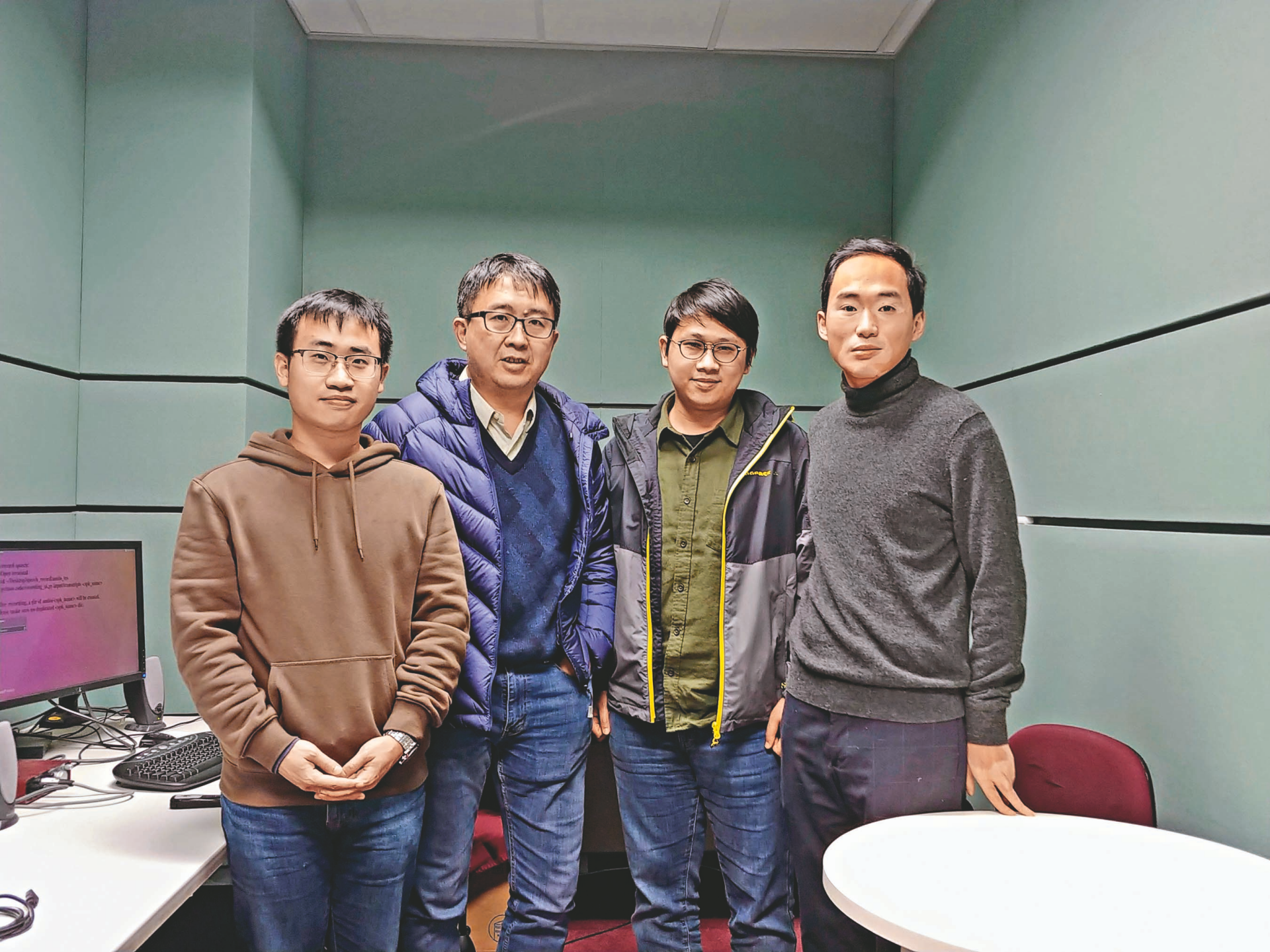

As a specialist in deep learning, speech and audio signal processing, Professor LEE Tan of the Department of Electronic Engineering has developed spoken language technologies that enable AI to reproduce a person’s voice. In the Digital Signal Processing and Speech Technology Laboratory, he has developed, for the first time, a personalised Chinese text-to-speech system (TTS). What has drawn much of the community’s attention recently is his team’s success in helping a patient, Jody, with oral-laryngeal cancer to reproduce and “clone” her own voice with AI speech technology. The system synthesised the Jody’s voice characteristics and speaking styles and allowed her to “speak” just by typing on the mobile phone.

The AI tries to learn as much as possible about the voice of the patients from the recorded data, or what is called training data, before their ability to speak is lost entirely. A speech synthesis model is then constructed, drawn from the patient’s speech at that time that carries a close resemblance to the original voice. This is then integrated into a mobile app, personal to that patient who can tap out what they want to say and be heard in their own voice. Currently, the team has been in touch with medical colleagues in learning about other diseases like Parkinson’s and amyotrophic lateral sclerosis (ALS) where people might also benefit and to whom the team can offer the option, saving the voices of more silent-to-be.

From Research to Market

-

RESEARCH

-

SOCIAL IMPACT & PUBLIC ENGAGEMENT

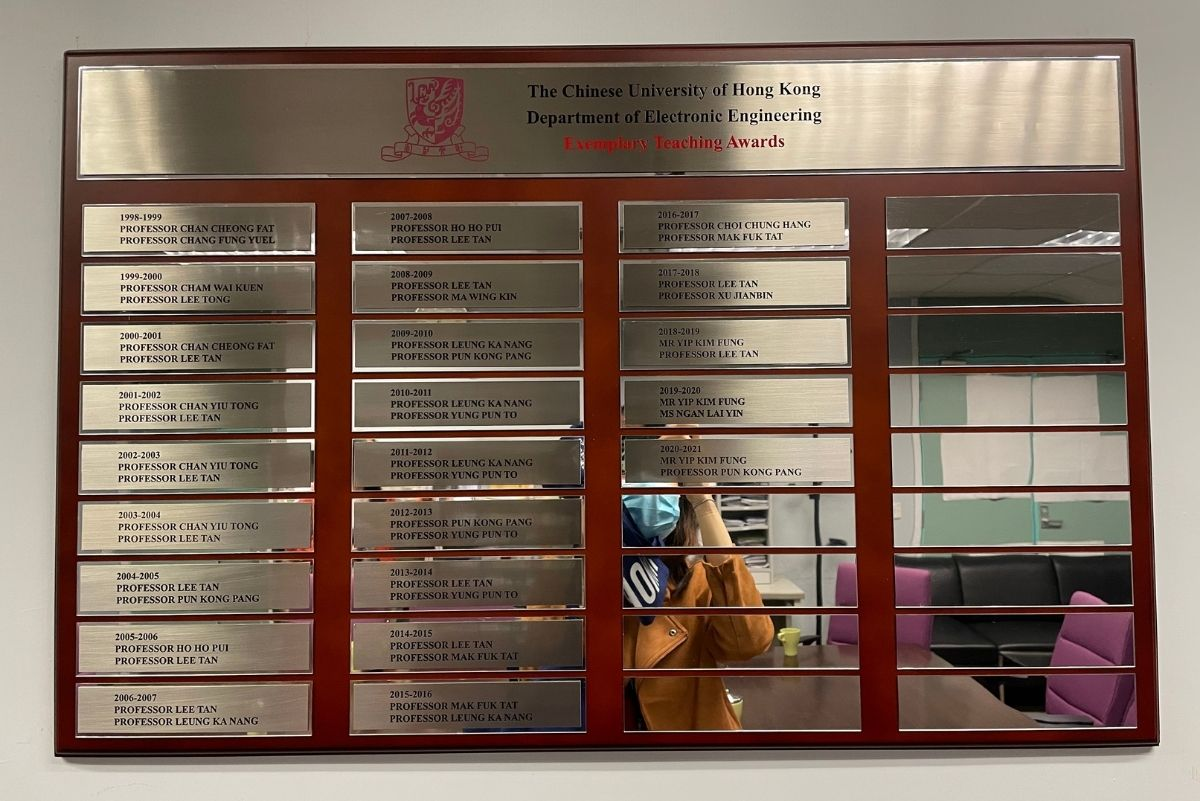

Professor LEE has been working on speech and language-related research since early 90s. His research interests cover speech and audio signal processing, spoken language technology, deep learning models of speech and language, paralinguistics in speech, and neurological basis of speech and language. He has contributed to developing Cantonese-focused spoken language technologies that have been widely licensed for industrial applications.

The application of voice reproduction technology for Jody is derived from Professor LEE’s earlier project “Personalised Child Story Channel” supported by the Knowledge Transfer Project Fund (KPF), in which Professor LEE and his team draw on AI and mobile internet technologies to develop a new mobile application to achieve instantaneous generation and modification of personalised stories in the form of online audio stories. The app provides a brand-new experience for children, parents and teachers, effectively engaging them in the process of story creation and sharing.